The various feeds were multiplexed for a live transmission via the Eurovision satellite network to RAI’s experimental test bed in the Aosta Valley, Italy, and via the Eurovision fiber infrastructure to the European Championships Broadcast Operations Centre (BOC) based at BBC Glasgow.

MPEG-H Audio was used for several feeds, and the Fraunhofer IIS engineering team worked closely with technology partners ATEME, Jünger Audio, MediaKind, Kai Media, b<>com and Qualcomm to demonstrate the full set of features and capabilities of the MPEG-H Audio system.

It was very rewarding to see devices from different manufacturers supporting MPEG-H Audio work together seamlessly in a real-time chain and interoperate fully: authoring and monitoring units, post-production plug-ins, contribution links, and emission and playback devices. This demonstrates once again the benefit of truly open standards, which allow technology companies to innovate on top of existing standards and bring new and better products to market.

Three MPEG-H Audio services ran in parallel, with content produced and delivered live using available equipment from different manufacturers. Each service was designed to demonstrate how easily MPEG-H Audio can be enabled in existing SDI-based broadcast facilities.

Service 1: “MPEG-H Audio with 1080p100 HFR and HLG video”

The main MPEG-H Audio service was designed to show the benefit of combining immersive and interactive sound with High Frame Rate (HFR) and High Dynamic Range (HDR) video for sports events, offering the viewer a truly immersive experience.

The immersive mix (7.0+4H) was created by BBC, France Télévisions, RAI and ZDF sound engineers using SSL’s new console with support for 3D Immersive Audio.

In addition to the immersive sound bed, four interactive mono object signals were used for two commentaries and two audio descriptions in English and French.

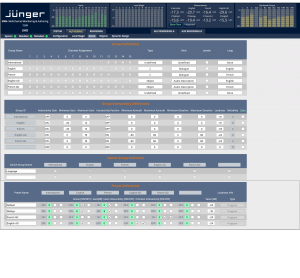

The live metadata authoring of the MPEG-H immersive audio content was made possible by the Multichannel Monitoring and Authoring (MMA) system provided by Jünger Audio. The personalization and interactivity options are enabled by the MPEG-H Audio metadata, which treats individual elements of an audio mix as objects that the viewer can adjust at playback.

For the live broadcast, the TITAN encoder was provided by the leading video-compression solutions provider Ateme. With integrated support for MPEG-H Audio, Ateme’s encoder makes the immersive and personalization features of MPEG-H available to DVB markets.

Service 2: “MPEG-H Audio (HOA) with 1080p100 HFR and HLG video”

Together with Qualcomm, a second MPEG-H service was provided using scene-based production tools from b<>com. Because MPEG-H Audio natively supports Higher Order Ambisonics (HOA), a scene-based bed can be used instead of a channel-based one. For this service, the immersive mix was therefore created using a scene-based (3rd-order Ambisonics) bed together with the same four additional objects.
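For a sense of scale, an Ambisonics scene of order $N$ is described by $(N+1)^2$ spherical-harmonic coefficient signals, a general property of the Ambisonics representation rather than a figure stated for this trial. The 3rd-order bed therefore comprises 16 signals, compared with the 11 channels of the 7.0+4H bed used in the main service. This counts the scene representation only; the number of transport channels MPEG-H actually uses to code an HOA scene may differ.

$$
K = (N+1)^2, \qquad N = 3 \;\Rightarrow\; K = (3+1)^2 = 16
$$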

As with the main service, a second Jünger MMA system was used for metadata authoring, together with an additional Ateme encoder for emission.

Service 3: “MPEG-H Audio with 2160p50 HLG video, including a contribution chain”

The third service added an MPEG-H Audio contribution link ahead of the UHD emission encoder. A contribution link applies near-transparent compression and carries the metadata robustly and securely, so that both the signals and the metadata can still be manipulated downstream. The link consisted of the AVP 2000 Contribution Encoder and the MediaKind Content Processing (MKCP) Decoder, both manufactured by MediaKind. These are the first MPEG-H-capable contribution devices available on the market.

The contribution encoder also provides some authoring capabilities, allowing the broadcaster to edit the metadata written into the bitstream through the encoder’s intuitive GUI.

For emission, the Kai Media emission encoder was used. The Korean company was one of the first encoder manufacturers to integrate MPEG-H Audio into its ATSC 3.0 UHD encoder. It has now enabled support for DVB-based markets as well, and this trial marked the first time the Kai Media UHD encoder with MPEG-H support was used in a DVB MPEG-2 TS-based service.

A third MMA system, provided by Jünger Audio, was placed between the contribution decoder and the emission encoder to monitor the audio scene. This service also used a different audio configuration from the other two MPEG-H programs: a 5.1 downmix together with the two commentaries and audio descriptions in English and French.

For all three services, the authored MPEG-H metadata enabled users to personalize their audio experience. First, they could choose between different versions of the content (presets): a default preset, a dialog enhancement preset and two audio description presets. In addition, interactivity features such as level and position interactivity were enabled for each preset. During production, the sound engineers could adjust all interactivity features live using the MMA systems. They were able to create new presets on the fly, change the interactivity ranges for any available audio object, disable interactivity for some presets, or even switch seamlessly to a completely different configuration.
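The interplay of presets and interactivity ranges can be illustrated with a small sketch. Everything in it is hypothetical: the names `Interactivity`, `Preset`, `resolve_gain` and `resolve_shift`, the object labels and the example ranges are invented for illustration. It is not the MPEG-H metadata syntax, and it does not reflect any manufacturer's API. The sketch assumes the simplest plausible rule: a viewer's requested adjustment is clamped to the range the sound engineer authored for that object in the selected preset, and ignored where interactivity is absent or disabled.

```python
from dataclasses import dataclass, field

# Hypothetical data model for illustration only; NOT the MPEG-H metadata
# syntax and NOT any vendor's API.


@dataclass
class Interactivity:
    """Limits a sound engineer might author for one object in one preset."""
    enabled: bool = True
    gain_range_db: tuple[float, float] = (0.0, 0.0)        # allowed user gain offset (dB)
    azimuth_range_deg: tuple[float, float] = (0.0, 0.0)    # allowed left/right shift (deg)
    elevation_range_deg: tuple[float, float] = (0.0, 0.0)  # allowed upward/downward shift (deg)


@dataclass
class Preset:
    """A selectable version of the content: default gains plus per-object limits."""
    name: str
    default_gain_db: dict[str, float]
    limits: dict[str, Interactivity] = field(default_factory=dict)


def clamp(value: float, bounds: tuple[float, float]) -> float:
    """Restrict value to the closed interval [low, high]."""
    low, high = bounds
    return max(low, min(high, value))


def resolve_gain(preset: Preset, obj: str, user_offset_db: float) -> float:
    """Preset default gain plus the viewer's offset, clamped to the authored range.

    If interactivity is absent or disabled for this object in this preset,
    the viewer's request is ignored and the default gain is used."""
    base = preset.default_gain_db[obj]
    lim = preset.limits.get(obj)
    if lim is None or not lim.enabled:
        return base
    return base + clamp(user_offset_db, lim.gain_range_db)


def resolve_shift(preset: Preset, obj: str, az_deg: float, el_deg: float) -> tuple[float, float]:
    """Viewer's requested position shift, clamped to the authored ranges."""
    lim = preset.limits.get(obj)
    if lim is None or not lim.enabled:
        return (0.0, 0.0)
    return (clamp(az_deg, lim.azimuth_range_deg), clamp(el_deg, lim.elevation_range_deg))


# Example: a hypothetical English audio description preset.
ad_preset = Preset(
    name="Audio description (EN)",
    default_gain_db={"stadium_bed": 0.0, "ad_en": 0.0},
    limits={"ad_en": Interactivity(gain_range_db=(-6.0, 6.0),
                                   azimuth_range_deg=(-30.0, 30.0),
                                   elevation_range_deg=(0.0, 30.0))},
)
print(resolve_gain(ad_preset, "ad_en", 10.0))         # 6.0: +10 dB request clamped to +6 dB
print(resolve_gain(ad_preset, "stadium_bed", 10.0))   # 0.0: no interactivity authored for the bed
print(resolve_shift(ad_preset, "ad_en", 45.0, 15.0))  # (30.0, 15.0)
```

Keeping the limits inside each preset mirrors the workflow described above: an engineer can widen, narrow or disable a range for one preset without affecting the others.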

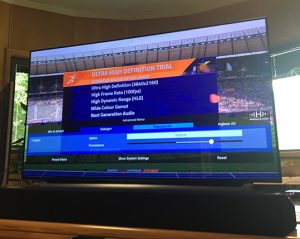

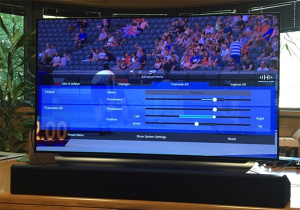

The MPEG-H programs were demonstrated in Berlin, Glasgow and the Aosta Valley using an immersive soundbar that decodes and plays back the MPEG-H streams, creating an immersive experience without the need for loudspeakers all around the room. With MPEG-H Audio, viewers could also switch between different versions of the content, select their preferred language and dialogue level, and use the advanced menus to adjust the balance between stadium sound and commentary to their personal taste. During the demonstration, users were also able to move the audio description “away from the screen” to the left, right or above, separating it more clearly from the main dialog in the centre channel.

Header image © Fraunhofer IIS